Event driven applications with Serverless Knative Eventing - Introduction

In this module, you will learn how to use streams for Apache Kafka and OpenShift Serverless (Knative Eventing) to build an event-driven application without having to deal with the underlying messaging infrastructure.

Business context

In today’s fast-paced digital landscape, businesses collect vast amounts of data through customer interactions, product sales, SEO clicks, and more. However, the true value of this data lies in the ability to derive business intelligence from it. In this module, you will see how an event-driven architecture can use an AI/ML engine to turn that data into valuable business intelligence.

Globex, the fictitious retail company, wants to extend its eCommerce website to allow customers to leave product reviews. Globex would like to:

- Moderate the language of review comments so that foul language is filtered out
- Build a Sentiment Analysis system to understand the sentiment behind each product review

Technical considerations

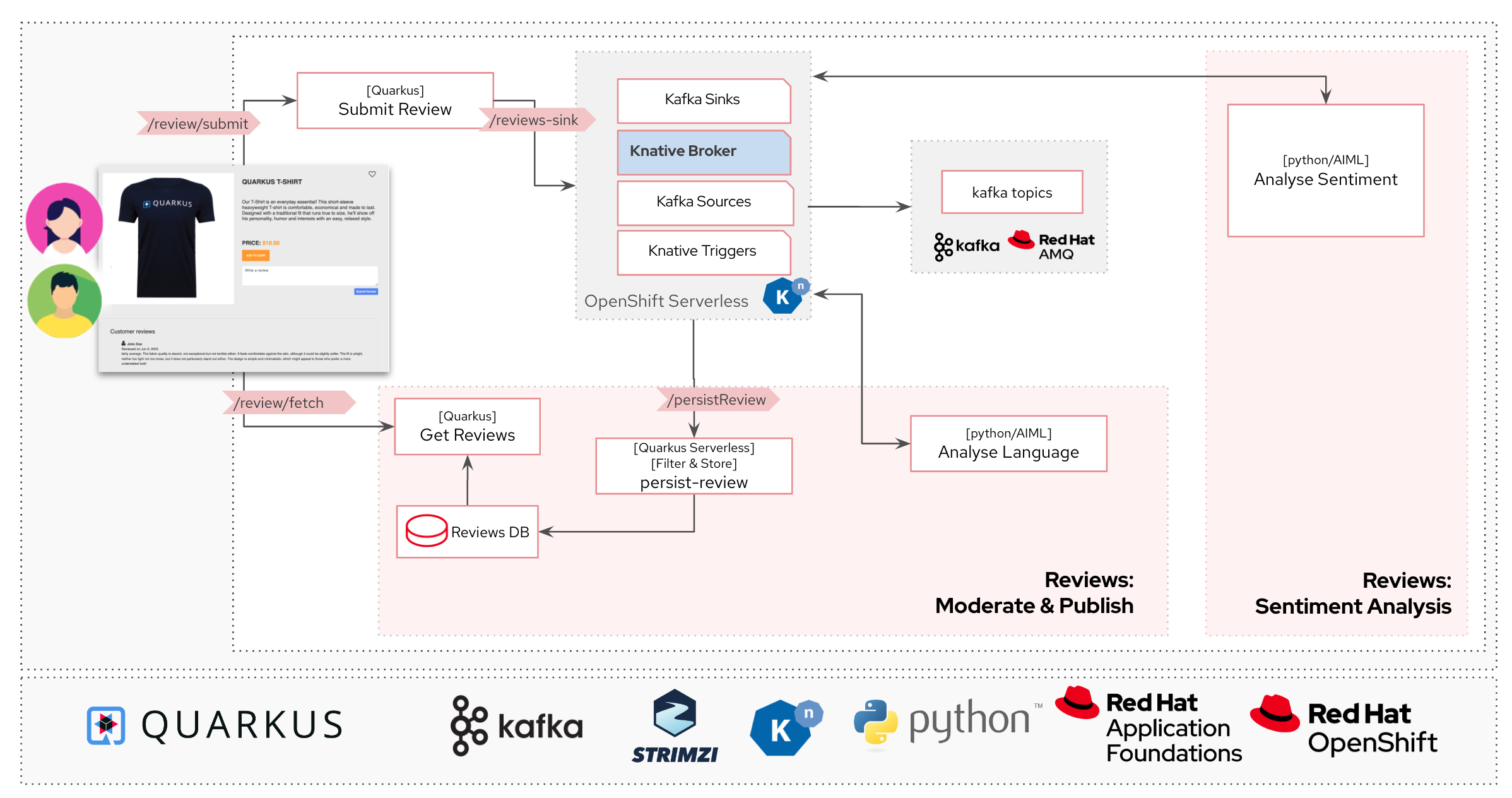

Globex decides to adopt an event-driven architecture, using Apache Kafka as the data streaming platform for product reviews together with OpenShift Serverless Eventing. Product reviews submitted by customers are pushed to a Kafka topic, which is then consumed by both a Review Moderation Service (which moderates foul or abusive language) and a Sentiment Analysis Service (which scores the sentiment as positive or negative).

Data flows in and out of the different systems through the OpenShift Serverless Eventing architecture, which uses brokers, sources, and triggers to build a scalable, fully decoupled system.
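To make the roles of brokers and triggers more concrete, here is a minimal in-memory sketch in Python. It is not Knative code: the `Broker` and `Trigger` classes, the inbox lists, and the event payload are hypothetical stand-ins, and only the event types `submit-review` and `persist-review` come from the architecture steps described later in this module. The sketch assumes exact-match filtering on event attributes and shows how a producer can publish one event without knowing which consumers will receive it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Trigger:
    """Subscribes one consumer to a broker, with an exact-match attribute filter."""
    filter_attributes: Dict[str, str]
    subscriber: Callable[[dict], None]

    def matches(self, event: dict) -> bool:
        # Every filter attribute must equal the corresponding event attribute.
        return all(event.get(k) == v for k, v in self.filter_attributes.items())


@dataclass
class Broker:
    """In-memory stand-in for a broker: receives events and fans them out."""
    triggers: List[Trigger] = field(default_factory=list)

    def publish(self, event: dict) -> int:
        """Deliver the event to every matching trigger; return the delivery count."""
        delivered = 0
        for trigger in self.triggers:
            if trigger.matches(event):
                trigger.subscriber(event)
                delivered += 1
        return delivered


# Hypothetical consumers, modelled as simple lists that collect events.
moderation_inbox: List[dict] = []
sentiment_inbox: List[dict] = []
persistence_inbox: List[dict] = []

broker = Broker()
broker.triggers.append(Trigger({"type": "submit-review"}, moderation_inbox.append))
broker.triggers.append(Trigger({"type": "submit-review"}, sentiment_inbox.append))
broker.triggers.append(Trigger({"type": "persist-review"}, persistence_inbox.append))

# The producer only knows the broker, not the consumers behind it.
review_event = {
    "specversion": "1.0",
    "id": "demo-1",
    "source": "globex/reviews-service",  # hypothetical source identifier
    "type": "submit-review",
    "data": {"review_text": "Great product"},  # hypothetical payload
}
delivered = broker.publish(review_event)
```

Because both triggers for `submit-review` match, the same event reaches the moderation and sentiment consumers, while the persistence consumer receives nothing until a `persist-review` event is published. Adding a new consumer means adding a trigger, with no change to the producer.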

Once a review is moderated and marked as suitable, it is persisted in the Globex Product Review DB (PostgreSQL) so that it can be shown on the Products page. As a first step, the Sentiment Analysis score can be viewed in the Kafka messages. At a later time, it will be used to build a dashboard (Grafana) showing how well a particular category of products performs over different time periods.

Here is an overview of the critical components that are brought together to build this system.

Red Hat streams for Apache Kafka

Red Hat streams for Apache Kafka will be your core event streaming platform, where the review comments are stored and propagated to other systems and services that moderate and analyse them.

Red Hat OpenShift Serverless

Red Hat OpenShift Serverless, built on Knative, makes it easy to build serverless and event-driven applications on Kubernetes. It has two main components:

- Serving supports autoscaling, including scaling pods down to zero.
- Eventing enables event-driven architecture by routing events from event producers (known as sources) to event consumers (known as sinks). These events conform to the CloudEvents specification.

CloudEvents makes it easy for services and systems to interact with each other in a standard way. A CloudEvent has a standard structure made up of a set of attributes that describe the event, along with the event data itself.
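As an illustration of that structure, the following Python sketch builds a CloudEvent as a plain dictionary and serializes it to JSON. The four required attributes (`specversion`, `id`, `source`, `type`) come from the CloudEvents 1.0 specification, and the `submit-review` type is the one used in this module. The `source` value, the payload fields, and the helper names `make_review_event` and `missing_attributes` are assumptions made for this example, not the workshop's actual implementation.

```python
import json
import uuid
from datetime import datetime, timezone

# Context attributes that CloudEvents 1.0 requires on every event.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def make_review_event(product_id: str, review_text: str) -> dict:
    """Build a CloudEvent (as a dict) for a newly submitted review."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": "globex/reviews-service",  # hypothetical source identifier
        "type": "submit-review",
        "time": datetime.now(timezone.utc).isoformat(),  # optional attribute
        "datacontenttype": "application/json",  # optional attribute
        # Hypothetical payload shape; the real review schema may differ.
        "data": {"product_id": product_id, "review_text": review_text},
    }


def missing_attributes(event: dict) -> list:
    """Return the required attributes that are absent or empty."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


event = make_review_event("329199", "Great socks, very comfortable!")
print(json.dumps(event, indent=2))
```

Consumers can rely on the context attributes, such as `type`, for routing decisions without having to parse the `data` payload.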

Architecture

The architecture uses Kafka for messaging, Knative Eventing for event routing, and AI-based Knative Services for processing the reviews.

- The user submits a review via the Globex product page.
- The Reviews Service API receives the review and emits a CloudEvent to the Kafka Event Sink via HTTP POST.
- The KafkaSink persists this incoming CloudEvent to a suitable Apache Kafka topic. A KafkaSink is a type of event sink to which events can be sent from a source (such as apps or devices).
- The Knative KafkaSource reads the messages stored in Kafka topics. KafkaSource sends the Kafka messages as CloudEvents to a Knative Broker.
- The Knative KafkaSource then sends messages as CloudEvents through HTTP to the Knative Broker for Apache Kafka. Brokers provide a discoverable endpoint for incoming events/messages, and use Triggers for actual delivery.
- The configured triggers filter the events from the Broker based on event type. A Trigger subscribes to a specific broker, filters messages based on CloudEvents headers, and delivers them to Knative services.
- Events of `type: submit-review` are routed to the appropriate AI-based Knative Services.
- After processing the messages, the AI Services send new CloudEvents back to the Kafka Sink with details of the review rating and moderation results.
- The Kafka Sink writes the new messages back to the appropriate Kafka topics.
- KafkaSource reads these new service-generated messages from Kafka.
- The new messages are then routed to the Knative Broker again.
- The Trigger with filter `type: persist-review` gets activated.
- The moderated reviews are then sent to a Review Persistence Service.
- The Persist Reviews Service then saves the review in the database.

These reviews are finally fetched and displayed on the product page.
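The second step above, where the Reviews Service emits a CloudEvent via HTTP POST, can be sketched in Python as follows. This is a minimal example that assumes the CloudEvents HTTP binary content mode, in which the context attributes travel as `ce-` prefixed HTTP headers and the request body carries only the event data. The sink URL, the `source` value, the payload fields, and the `build_review_request` helper are hypothetical. The request is only constructed here, not sent.

```python
import json
import uuid
import urllib.request

# Hypothetical address; in a cluster this would be the sink's ingress URL.
SINK_URL = "http://kafka-sink.example.svc.cluster.local/reviews"


def build_review_request(sink_url: str, review: dict) -> urllib.request.Request:
    """Build an HTTP POST carrying a CloudEvent in binary content mode."""
    body = json.dumps(review).encode("utf-8")
    headers = {
        # CloudEvents context attributes, mapped to ce-* headers.
        "ce-specversion": "1.0",
        "ce-id": str(uuid.uuid4()),
        "ce-source": "globex/reviews-service",  # hypothetical source identifier
        "ce-type": "submit-review",
        # Describes the format of the event data in the body.
        "Content-Type": "application/json",
    }
    return urllib.request.Request(sink_url, data=body, headers=headers, method="POST")


# Hypothetical payload shape; the real review schema may differ.
request = build_review_request(
    SINK_URL,
    {"product_id": "329199", "review_text": "Great socks, very comfortable!"},
)

# urllib.request.urlopen(request) would send the event to the sink.
```

Keeping the event type in a header is what allows the triggers in the later steps to filter on it without inspecting the review body.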

Implementation

In the next chapter, you will be guided through the implementation and deployment of this solution. Since this solution involves much more than can be achieved during a workshop, a number of components are already in place. You will focus on a few key activities that will give you a good understanding of how to build an event-driven application.

Proceed to the instructions for this module.